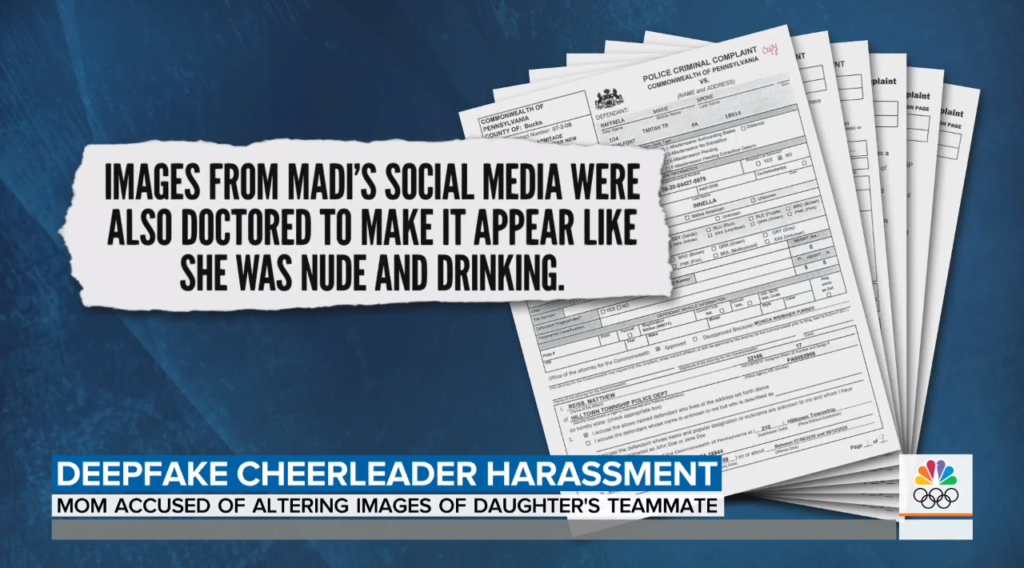

A few days ago, a unique cyberbullying situation came to light involving a 50-year-old woman who targeted teenage girls in her Bucks County, Pennsylvania community last summer. The most interesting twist was not the vast age difference between the aggressor and the targets, but the fact that software was used to alter original images found online to make it seem like the other girls – who belonged to a cheerleading club her daughter previously attended – were nude, engaged in underage drinking, and/or using vaping products (prohibited by the gym). These images were spread via harassing text messages from anonymous phone numbers the girls did not recognize, and the messages also included a suggestion that one of the girls kill herself.

The term “deepfake” (“deep learning + fake”) seems to have originated on Reddit back in 2017 when a number of users began sharing their creations with each other. Generally speaking, it involves the use of artificial intelligence (AI) (rooted in machine learning techniques) to create incredibly realistic-looking content intended to come across as legitimate and real. Often, algorithmic models are created to analyze significant amounts of content (e.g., hours of video of a person, thousands of pictures of a person – with specific attention to key facial features and body language/position), and then take what is learned and apply it to images/frames one might want to manipulate (e.g., superimposing those original features onto the target content/output). Additional techniques such as adding artifacts (like glitching/jittering that appears normal or incidental) or using masking/editing to improve realism are also employed.

The technology has come a long way over the last few years. Many of our readers might remember FakeApp – which allowed a user to swap faces with another person in videos they create – and FaceApp – which did the same but also had a filter that showed you what you’ll look like as you age. Another app called Zao was popular for a while; it allowed you to take a single picture of yourself and put your face on TV or movie clips. As you might imagine, creating these types of pictures and videos has become a pastime for many; numerous notable individuals like Barack Obama, Nicolas Cage, and Tom Cruise (a deepfake of whom went viral just this month) have been featured, largely to experiment with the technology and get a laugh, like, and/or share from others. But the longest shadow cast on deepfake technology comes from its use in propagating misinformation and disinformation in politics. That specific problem has forced the major social media and search companies to create policies prohibiting certain types of deepfakes to keep their platforms from becoming a breeding ground for dangerous falsehoods.

Back to the case in Pennsylvania: the woman was identified because an IP address from one of her devices was linked to the phone numbers she was using to target the teenage girls, and timestamps on texts sent from the seized phones matched up with what was found on the girls’ devices. This type of digital forensics is rudimentary in this day and age, and hopefully law enforcement personnel in every community feel like they can adequately respond to these types of cases should they increase in number. If not, they need to be better resourced and trained.

Also in terms of a formal response, it is essential that school administrators and other decision-makers remember the axiom that often, there is more than meets the eye. All of us are so used to quickly consuming digital content and then reflexively internalizing it as truth (especially if it confirms what we already believe or want to believe). This, coupled with availability bias (where we align our interpretations based on the information we have available to us – or, frankly, that is inundating us) and argumentum ad populum (where something seems to be true because so many of our friends on social media seem to believe it is true), often hinders our ability to carefully and objectively evaluate what is in front of us. Deepfakes are being used in cyberbullying situations. If a student appears to be in a compromising photo or video, we need to be absolutely sure the content has not been manipulated before exacting a disciplinary response. Such a determination must formally be built into the investigative process. Knee-jerk assumptions risk a lawsuit and, more critically, they can compound the pain experienced by the victim(s).

This particular case came up in my undergraduate course this week, which means that the eyes of at least some university students have been opened to the possibility of creating a deepfake to harass another person. I’m sure that this case has come across the radars of middle and high schoolers as well, and as such we may see a few more of these situations crop up. Software to do this is easily accessible with a simple Google search. Just as it would be foolish to believe deepfakes won’t be used to trick people into swallowing untruths before the next US election, it is foolish to believe that our students (and some adults) won’t use them to harm others.

Deepfakes have the potential to compromise the well-being of a nation when it comes to democracy and national security, and also have the potential to compromise the well-being of a target when one considers the emotional, psychological, and reputational damage they can inflict. However, the thing with deepfakes is that there are always aural, visual, and temporal inconsistencies to be detected. Those irregularities may miss observation by the human eye, but machine learning can be enlisted to identify and flag any non-uniformity. It has been said that AI can fix what AI has broken – I love that. All social media companies (as well as national governments) must continue to invest in improving their detection technologies because the algorithms to create increasingly realistic deepfakes are constantly being refined as well. We may also need to eventually integrate blockchain in the creation of any piece of digital content so that we can verify that each keeps its integrity from the time it was first created all the way through its distribution across the Web. This will be a fascinating area of study to follow, and I am excited to see what unfolds. Of course, we’ll keep you updated on the most important developments.
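To make the integrity idea a bit more concrete, here is a minimal sketch of the underlying mechanism, assuming a simple hash-chained ledger rather than any real blockchain platform. All of the function names (`fingerprint`, `append_record`, `ledger_is_intact`, `content_matches`) are hypothetical and exist only for illustration. The idea is that a piece of content is fingerprinted when it is created, each later record links back to the one before it, and any altered copy produces a different fingerprint.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 digest of the raw content bytes."""
    return hashlib.sha256(content).hexdigest()


def _hash_body(body: dict) -> str:
    """Hash a record body in a stable (sorted-key) JSON encoding."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(ledger: list, content: bytes, note: str) -> dict:
    """Add a record that links to the previous record's hash."""
    prev_hash = ledger[-1]["record_hash"] if ledger else GENESIS
    body = {
        "content_hash": fingerprint(content),
        "note": note,
        "prev_hash": prev_hash,
    }
    record = dict(body, record_hash=_hash_body(body))
    ledger.append(record)
    return record


def ledger_is_intact(ledger: list) -> bool:
    """Check that no record was edited and that the links are unbroken."""
    prev_hash = GENESIS
    for record in ledger:
        body = {k: record[k] for k in ("content_hash", "note", "prev_hash")}
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _hash_body(body):
            return False
        prev_hash = record["record_hash"]
    return True


def content_matches(ledger: list, content: bytes) -> bool:
    """True if this exact content was registered in the ledger."""
    digest = fingerprint(content)
    return any(r["content_hash"] == digest for r in ledger)


if __name__ == "__main__":
    ledger = []
    append_record(ledger, b"original photo bytes", "created")
    append_record(ledger, b"original photo bytes", "shared")
    print(ledger_is_intact(ledger))
    print(content_matches(ledger, b"altered photo bytes"))
```

A real system would also need trusted timestamps, signatures tied to the creator or device, and a ledger that no single party can quietly rewrite. This sketch only shows why a manipulated image would fail to match its registered original.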

Image sources: https://www.today.com/news/cheerleader-s-mom-accused-using-deepfakes-harass-girl-team-t211737 – Story by Maura Hohman, Today Show

The post Deepfakes and Cyberbullying appeared first on Cyberbullying Research Center.