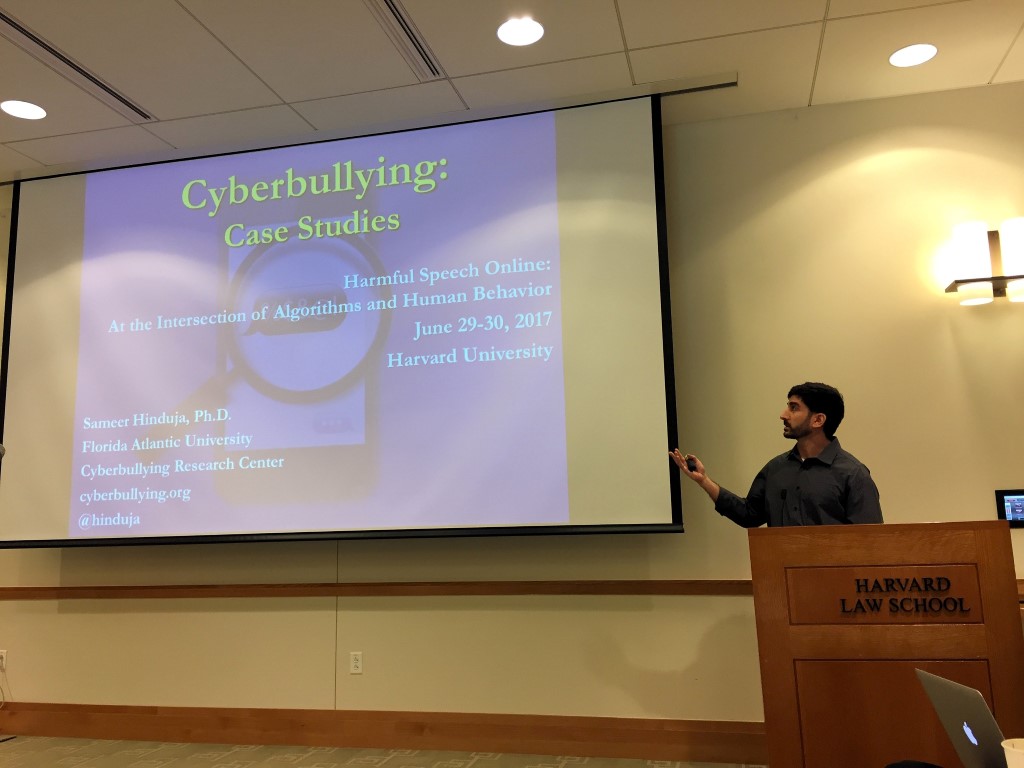

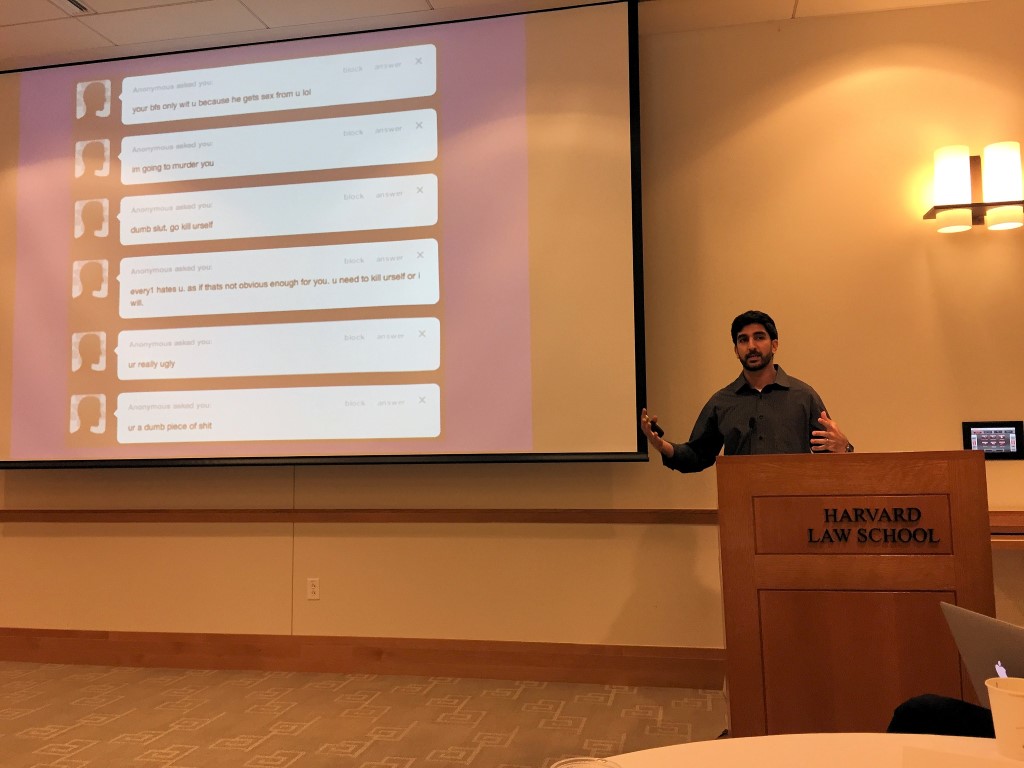

Last week, I attended a workshop at Harvard titled “Harmful Speech Online: At the Intersection of Algorithms and Human Behavior.” Sponsored and organized by the Berkman Klein Center for Internet & Society, the Institute for Strategic Dialogue, and the Shorenstein Center on Media, Politics and Public Policy, its goal was to “broadly explore harmful speech online as it is affected by the intersection of algorithms and humans, and to create networks and relationships among stakeholders at this nexus.” My specific role was to discuss some cyberbullying case studies and to stimulate conversation about how algorithms can not only automatically identify harmful speech and prevent its ill effects, but also, through machine learning, programmatically induce positive (or at least neutral) behavior and participation online.

I thought it would be helpful to summarize some take-home points from the workshop in the hope that they get our readers thinking about how technology can be marshaled to make the Internet a kinder, safer place. You may not know how to program a computer to perform a certain task, but I bet you have macro-level ideas about what could be done. And I believe that, in time, whatever we conceive will be attainable. I mean, think of how far we have come already with facial recognition, hypertargeted ads, and customized experiences on social media platforms, all driven by algorithms that have been “taught” to make future decisions based on previous ones. The future is exciting!

Before continuing, do read my primer on how machine learning can help us combat online abuse, which I hope spells out in layperson’s terms what all of this means. Also, the meeting was held under the Chatham House Rule to encourage openness and candor. The rule states that participants are free to use the information received, but neither the identity nor the affiliation of the speaker(s), nor that of any other participant, may be revealed. So please keep that in mind as you consider the following observations.

Social Media Companies: Policies, Perspectives, and Role

• Social media companies are in an unenviable position. Users don’t really want to hear that, but it’s true. The companies are not fans of abusive language or content on their sites, but they have to be careful about censorship and about sparking outcries from users who expect to express themselves freely on the platform. Doing too much and doing too little to control online expression both provoke backlash. The companies say they do not have enough human content moderators, and that the ones they have are overwhelmed with abuse reports. Moderators also sometimes make the wrong call about allowing or removing content because they don’t know the full story behind the ostensible harm.

• Social media companies also argue that they must have a global policy because they are global platforms where users interact across national and cultural boundaries, but they acknowledge that their policies are imperfect because cultures differ in how they view harm, violence, abuse, hate, shame, sex, and other complex issues. This raises the question: if harm is socially constructed and therefore differs across regional contexts (is “hyperlocalized”), how can companies still have (and apply) a universal policy? Should policies about revenge porn, cyberbullying, extremist content, and the like vary based on the part of the world in which they are applied? Is this even possible? Here’s an example from India: someone out to harm you could post a picture of you engaged in sexual activity while fully clothed, but it wouldn’t be taken down in India, because without nudity it would not be classified as “revenge porn.”

• The First Amendment is often cited as a reason why social media companies and other third-party providers cannot or do not deal promptly with cyberbullying, hate speech, or other harmful expression online. Beyond guaranteeing freedom of speech, though, the First Amendment also guarantees freedom of assembly. You have a right to assemble online (i.e., to go to, remain in, and participate in online spaces), but that right is undermined when abuse tolerated under the guise of free speech ruins your experience and drives you offline. However, it’s important to point out (from Justin’s and my perspective) that the Constitution of the United States says nothing about what a private company can or cannot do about free speech and free assembly. Because the First Amendment concerns governmental activities, it is not relevant here.

• Social media companies need to accept that they are in the business of human social relationships, and staff up accordingly. They have the financial wherewithal to hire more moderators, improve technological solutions to online harm, and devote more resources to these issues, but they do not, or at least not enough. This seems to be because they believe the money is better spent on monetizing engagement. To be sure, they are under pressure from stockholders to perform better and better, quarter after quarter. However, we would argue that profits would naturally increase if companies created safer environments where users consistently felt comfortable engaging with others.

Algorithms: The good, the bad, and the neutral?

• Algorithms can help us identify trends and patterns more easily. For example, there’s clearly a correlation between online and offline harm (we have found the same in our own research on offline and online bullying). In one project involving anti-Islamic tweets, whenever certain inflammatory anti-Islamic events occurred in the world, a spike in hateful tweets against Muslims occurred at the same time, and the researchers could watch this trend unfold and measure the covariation. With this example in mind, future bursts of hateful online activity against individuals or populations may portend real-life targeting and victimization of those same people, and can prompt us to mobilize protective agents and resources.

• Certain algorithms on the sites we frequent seem designed to drive engagement by drawing a person to the edges of who they are. For instance, workshop speakers shared that if you start to watch a somewhat violent video clip on YouTube, the Recommended Videos may draw you into more and more extreme videos of violence. If you are watching politically liberal videos, you will be recommended more and more left-leaning videos – which you . Social media companies benefit from creating a rabbit-hole experience in which you are sucked deeper and deeper and consequently spend more and more time on the site or app. Not only is this unhealthy, but it also fosters dogmatic, one-sided individuals who are never exposed to differing views.

• Algorithms sometimes unwittingly elevate harmful speech online simply because that speech is controversial and attracts the most interest and attention in the form of engagement (likes, comments, shares, retweets). Similarly, abusive expressions from social media influencers (e.g., those with tens of thousands of followers) tend to be seen more often, extending the radius of harm, simply because influencers have more clout on the platform. Both problems need to be addressed.

• Algorithms are not neutral. They don’t blindly and objectively evaluate content to answer questions like “is this bullying?” or “is this abusive?”; they are shaped by the data that “taught” them how to make those decisions. Those data come from humans, who hold race-, sex-, and class-based biases. As a result, algorithms may favor or endorse one side or perspective over another, and thereby hand additional power to the powerful.
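
For readers curious what engagement-driven ranking might look like in code, here is a deliberately simplified sketch in Python. It is not any platform’s actual algorithm: the `Post` fields, the weights, the follower-based reach boost, and the `abuse_penalty` parameter are all hypothetical, and the `flagged_abusive` flag stands in for the output of a harm classifier that we simply assume exists. What it illustrates is that a score built only from likes, comments, shares, and audience size has no notion of harm, so a heated thread or an influencer’s insult can easily outrank a calm post.

```python
import math
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    comments: int
    shares: int
    author_followers: int
    flagged_abusive: bool = False  # hypothetical output of an assumed harm classifier


def engagement_score(post: Post, abuse_penalty: float = 1.0) -> float:
    """Toy score: more interaction and a bigger audience mean a higher rank."""
    # Hypothetical weights: comments and shares count as stronger "interest" than likes.
    interactions = post.likes + 2 * post.comments + 3 * post.shares
    # Hypothetical reach boost: accounts with more followers get a larger multiplier.
    reach = 1.0 + math.log10(1 + post.author_followers)
    score = interactions * reach
    # With the default penalty of 1.0, being flagged as abusive changes nothing.
    if post.flagged_abusive:
        score *= abuse_penalty
    return score


def rank_feed(posts: list[Post], abuse_penalty: float = 1.0) -> list[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=lambda p: engagement_score(p, abuse_penalty), reverse=True)


posts = [
    Post("calm", likes=40, comments=2, shares=1, author_followers=200),
    Post("heated", likes=30, comments=45, shares=10, author_followers=200, flagged_abusive=True),
    Post("influencer", likes=20, comments=5, shares=5, author_followers=50_000, flagged_abusive=True),
]

print([p.post_id for p in rank_feed(posts)])                     # ['heated', 'influencer', 'calm']
print([p.post_id for p in rank_feed(posts, abuse_penalty=0.1)])  # ['calm', 'heated', 'influencer']
```

With the default `abuse_penalty` of 1.0, the two flagged posts rank first and second; setting it to 0.1 pushes them below the calm post. Note that even this simple demotion is only as good as whatever produces `flagged_abusive`, and that classifier would inherit the biases of its training data.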

Here’s what I took away from the meeting. Algorithms are here to stay, and they will increasingly be used to get users to do certain things on social media platforms. Resources should be spent not just on growing user bases and length of engagement, but also on creating and maintaining safe spaces in which everyone can interact. Machine learning can help with this, and we should put it to work. When we do, though, we need to understand its limitations so that we don’t assume technological solutions are a panacea. As we learned with blocking and filtering tactics, technology alone will not be enough. We therefore need to study further, and better understand, how power, privilege, stereotypes, and misguided assumptions shape our perceptions and experiences of online harm and abuse.

Image source:

http://news.mit.edu/sites/mit.edu.newsoffice/files/images/hands_devices_740.jpg

The post Harmful Speech Online: At the Intersection of Algorithms and Human Behavior appeared first on Cyberbullying Research Center.